A Glimpse into the World of Accessibility

Get ready for a journey into the world of accessibility features and their impact on user experience

I still vividly remember the first time I witnessed a visually impaired person interacting with the accessibility features on an iPhone. I had the valuable opportunity to participate in user research testing a navigation app specifically designed for visually impaired individuals. Some participants had been blind since birth, while others could see the screen if they held it very close to their face.

As a non-disabled person, I had always assumed that people with disabilities required constant support and assistance. Consequently, I approached the research with the utmost care, making sure that the participants felt comfortable and safe. What truly astonished me, however, was the level of independence and confidence they demonstrated. In their world, they were not "disabled"; they were complete individuals who skilfully used the resources available to them to achieve their goals. Looking back, I felt embarrassed to realise that I may have unintentionally treated them like young children, giving explicit instructions and over-communicating. Although my intentions were good, I recognised that I may have conveyed an unconscious bias that suggested a lack of competence on their part.

That first-hand experience opened my eyes to the power of accessibility features. Unlike in user research with non-disabled participants, the testing process needed to be personalised for each participant. I observed participants configuring language settings, adjusting VoiceOver speaking rates, and using gestures that were new to me, such as touching the screen with two, three, or even four fingers. How often do we touch our screens with four fingers simultaneously? For them, it was a shortcut to jump from the top of the screen to the bottom, allowing them to reach call-to-action buttons typically located at the end of a page. It reminded me of how we scroll all the way down to find the "Agree to Terms" button on a lengthy webpage.

VoiceOver by Apple

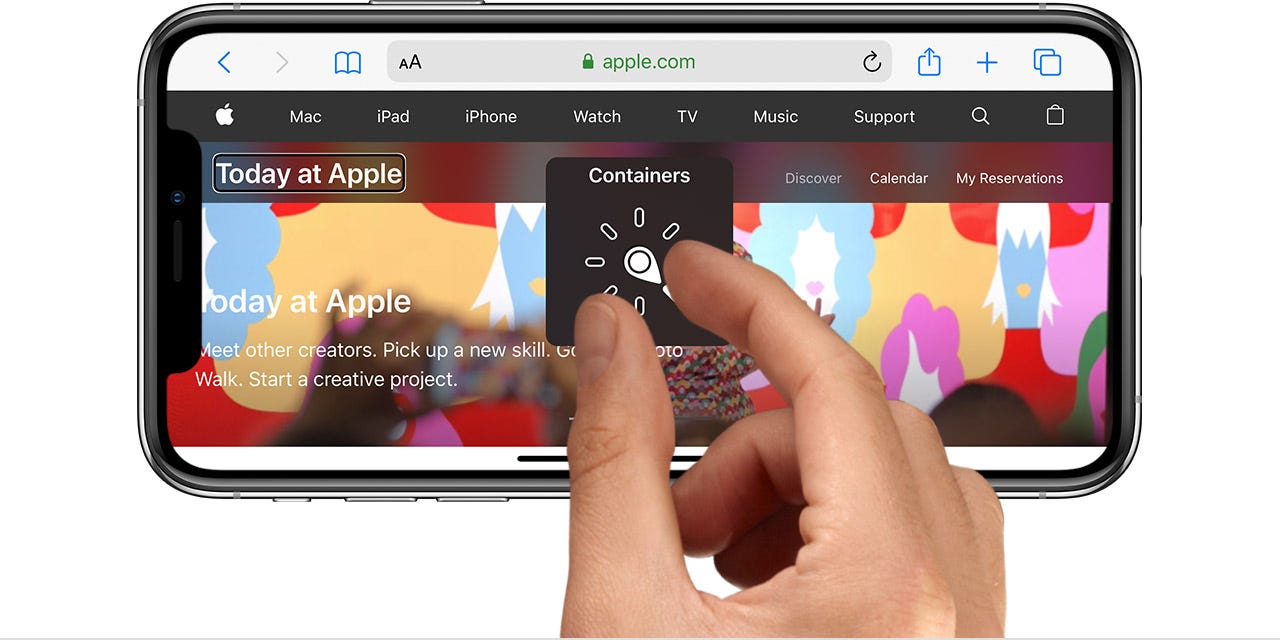

The four-finger gesture mentioned above is a feature of Apple's VoiceOver, introduced in 2009 with the iPhone 3GS. Prior to that, the iPhone had no built-in screen reader. VoiceOver is a gesture-based screen reader that enables users to operate their iPhones even if they can't see the screen. It provides audible descriptions of on-screen content, and users can customise the speaking rate and pitch to suit their needs. Users also learn specific gestures, such as double-tapping to open an app or flicking left or right to move between items. Typing on the virtual keyboard involves selecting a key and then double-tapping to enter it. VoiceOver also includes a contextual, menu-like control called the rotor. VoiceOver comes pre-installed on Apple devices and receives improvements and new features through iOS updates.

TalkBack by Android

While Apple is often associated with leading accessibility initiatives, Google also joined the movement in 2009 by introducing its own accessibility features. It unveiled TalkBack, an open-source Android screen reader. TalkBack offers spoken, non-spoken auditory, and haptic feedback. For text, including the time and notifications, the screen reader vocalises the content precisely, even spelling out the characters in web addresses. When users touch an element that requires action, TalkBack announces what they have touched and lets them either activate it with a double tap or move to the next element without triggering unintended actions. Users can customise settings such as vibration, audible tones for highlighted items, and more. TalkBack comes pre-installed on Android devices as part of Google's Android application suite and receives improvements and new features through regular Google Play updates.

Nokia Screen Reader by Symbian

Since then, other operating systems have also developed their own accessibility features. One notable example is Symbian, used in Nokia and Sony Ericsson devices. Symbian introduced its accessibility features in October 2011 at Nokia World 2011 in London, where Nokia announced a free mobile screen reader called Nokia Screen Reader (NSR), designed for blind and visually impaired individuals. NSR offers similar functionality to Mobile Speak, a commercial third-party screen reader, but with fewer customisation options, such as a limited selection of voices (standard or high-quality Nokia voices). Interestingly, due to their hardware design, Nokia devices provide four touch modes: Keypad mode, Joystick mode, Review Cursor mode, and Stylus mode. NSR is not pre-installed on Nokia devices; users need to download it from the Nokia Store, which may require sighted assistance. Unfortunately, Symbian was discontinued in 2012.

In the next article, I will delve deeper into the design and user experience aspects of these accessibility features across different platforms. Stay tuned for an exploration of how these features shape inclusive digital experiences for all users.

Read more:

Apple Announces the New iPhone 3GS — The Fastest, Most Powerful iPhone Yet

TalkBack: An Open Source Screenreader For Android — https://opensource.googleblog.com/2009/10/talkback-open-source-screenreader-for.html

Review: NSR Reader — http://www.allaboutsymbian.com/reviews/item/14578_NSR_Reader.php

A Timeline of iOS Accessibility: It Started with 36 Seconds https://www.macstories.net/stories/a-timeline-of-ios-accessibility-it-started-with-36-seconds/